Truth vs. Truthiness | The Philosophy of Search Results

Contents

- Truthiness Engine

- Garbage Engine

- The Knowledge Graph

- Data Presents

- Data Voids

- But What About BERT?

- Opinions

- Shrimpin' Ain't Easy

CW: Antisemitism, racism, homophobia.

Google has a truth problem. This is not the hot take I wish it was, but I’m also not going into this with “Google LIES” because that’s not what this is about. Google has a truth problem because it can’t decide whether it is a truth engine or a truthiness engine.

Google Is a Truthiness Engine

I miss the Colbert Report. Maybe that’s just nostalgia talking, and if I watched it today I’d be cringing at it, but still. One of my favourite contributions to the English language that show made is “Truthiness.” (You can watch the clip here, on a Comedy Central site that isn’t HTTPS what the hell.) Truthiness is “truth from the gut”-- truth that isn’t true based on facts, but on a gut sense. Truthiness is, fundamentally, not the truth.

“Truthiness is "What I say is right, and [nothing] anyone else says could be true." It's not only that I feel it to be true, but that *I* feel it to be true. There's not only an emotional quality, but there's a selfish quality.”

So what does this have to do with Google?

Google is a knowledge engine. People use it to find out answers to problems and questions.

Google is also a “happiness engine.” Google wants users to be happy.

These are two diametrically opposed ideas.

The truth doesn’t make people happy. Hell, I read true things every day and it just makes me sadder and more stressed out. If you want people to be happy, you don’t always give them the truth. You give them answers, sure. But not the truth.

Google has been dealing with this problem for a long time. It was so simple in 2001, when you could just match text to text and nod and be like “yes, this is what I was looking for.” Unfortunately, humans never use technology the way devs expect them to. And people quickly learned about hidden text and backlink schemes and all the different little tricks that could make you rank even though you really, really shouldn't. So Google had to fix things.

When Google was just a combination of PageRank and text matching, people could write whatever the hell they wanted, and if there were enough links pointing to the page, or few enough other pages that matched with the text, you could rank it. I understand that a lot of SEOs are nostalgic for those days, but I’m not, mostly because I had to talk to people who said “it has to be right or Google wouldn’t rank it.”

Because people lie on the internet.

A lot.
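The old "PageRank plus text matching" setup described above can be sketched in a few lines. This is a toy, not Google's code: the `pagerank` and `search` functions, the `.example` page names, and the page texts are all made up for illustration, and the PageRank here is the plain textbook power iteration. The point is only that nothing in it checks whether a page is right.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook power-iteration PageRank over a dict of page -> outbound links."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    rank = {p: 1.0 / len(pages) for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / len(pages) for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page (no outbound links): spread its rank over everyone.
                share = damping * rank[page] / len(pages)
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


def search(query, texts, links):
    """Keep pages containing every query word, then order them by PageRank."""
    words = query.lower().split()
    rank = pagerank(links)
    matches = [
        page for page, text in texts.items()
        if all(word in text.lower().split() for word in words)
    ]
    return sorted(matches, key=lambda page: rank.get(page, 0.0), reverse=True)


# Hypothetical mini-web: one honest page with one inbound link,
# one lying page with a five-page link farm pointing at it.
texts = {
    "honest.example": "the moon is made of rock",
    "liar.example": "the moon is made of cheese",
}
links = {
    "honest.example": [],
    "liar.example": [],
    "blog.example": ["honest.example"],
    "farm1.example": ["liar.example"],
    "farm2.example": ["liar.example"],
    "farm3.example": ["liar.example"],
    "farm4.example": ["liar.example"],
    "farm5.example": ["liar.example"],
}

results = search("moon made of", texts, links)
print(results)
```

Both pages match the text equally well, so the only tiebreaker is links, and the link farm wins. The lie ranks first.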

Garbage in, Garbage Out (The Garbage is Search Queries)

People started asking Google things, and trusting Google, so Google figured they had to surface things more clearly to stop being embarrassed. This was especially a problem when a site lied its way to the number one spot.

Google realized this, and has, over time, done a lot of stuff to try and fix this. Machine learning is something that Google heavily relies on-- not just to fix ranking, but to remove as many human hands as possible from the process.

Google doesn’t want to be biased-- if you’re biased, you lose customers, because people think you’re against them. If Google can point to the machines and say “the machine did it”, they can wash their hands of accusations of direct bias from activist Googlers.

But machine learning based on human behavior is going to have the same problems as human behavior-- often exacerbated, because the checks and balances we have for humans in aggregate don’t work the same way for machines. Especially when the people making those machines are so…. monochrome.

So featured snippets and the knowledge graph are set up to give a basis of Truth to the extremely human and folly filled search space. Surely that fix worked, right?

Featured Snippets vs. Knowledge Panels

Google has tried to fix this by tempering the “answer people want” with the truth. This is partly why they’ve set up “Featured Snippets” and the Knowledge Graph-- so quick answers are from a dependable source, not a website out of their control. Fundamentally, the Knowledge Graph is the truth, and featured snippets are the truth from a source. Both can be manipulated by bad actors, but even then, it's the bad actor's fault, not Google's, right?

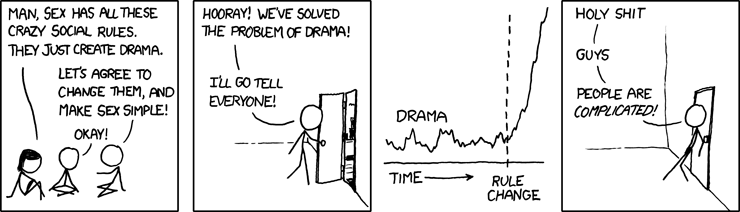

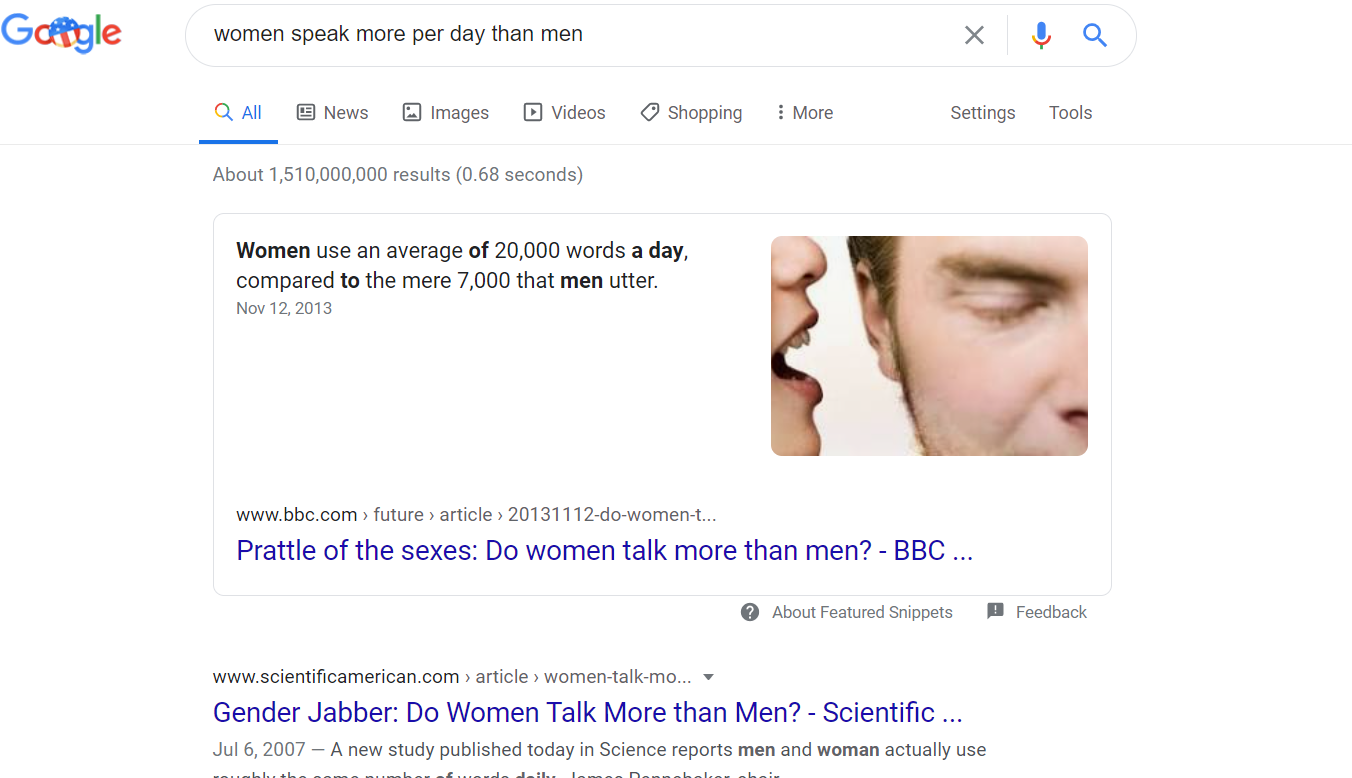

Even when the question isn’t wrong, just phrased “with bias,” you can get biased results-- even if the answer on the page is correct. Let’s talk about sexism!

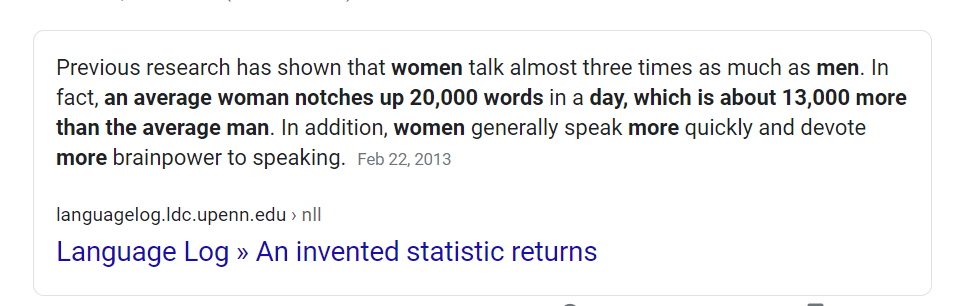

If you search for “women speak more per day than men” you get a big heaping helping of gender biases.

Both of these articles have extracted text that quickly responds to the query, in a way that satisfies it. If I’m trying to “prove” that women speak more than men, then I can just point to whoever I’m arguing with and say “see, hashtag bias confirmed.”

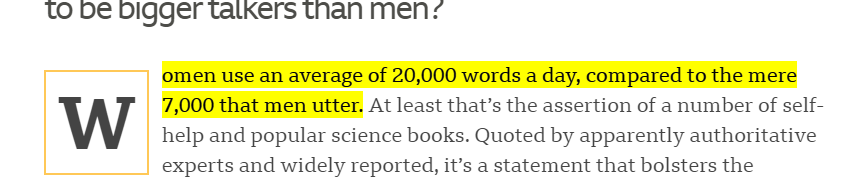

But what if I want to check my source here? I click the Featured Snippet link and get “fraggled” or “text to fragmented” down to my useful source, which is helpfully highlighted.

It doesn’t matter that this article is about the opposite here-- it doesn’t matter that the rest of the article debunks the first sentence. Unless I keep reading I’m going to come away with the impression that my bias is correct-- with sources.

(FYI: originally this post from Language Log held the featured snippet spot, pulling from a quote that the article itself was discrediting.

This is quoted in the Language Log article and referred to as “these little nuggets of nonsense.” The bulk of this web page is about debunking what is shown in the featured snippet.

The irony was... Delicious? Sure. It was delicious.)

Let’s get a little meaner. (I only really want to search for things in this article that are hate crimes against myself, so….)

If you search “lesbians are ugly” the first result is this article:

It’s great. Love seeing that first, Google!

This isn’t really Google’s fault-- they can’t vet questions like this that are biased, leading-- and also a matter of opinion. The machine couldn’t respond with “well actually, Kristen Stewart exists” here, because it doesn't have opinions. It just has other people's opinions.

Presenting Data Matters

I’d like to introduce you all to one of my favourite YouTube channels, Shaun Vids. For the purposes of this article, I suggest you watch this video about the XY Einzelfall Map. This is a German map created by a group of white supremacists, which has a list of “every crime that was committed by an immigrant” in Germany.

Now if you know anything about white supremacists, you probably know that they aren't an honest bunch. So...

What it actually is is a list of crimes, misdemeanors, and police incidents from events that mentioned maybe an immigrant being nearby or maybe an unknown assailant who could be an immigrant. Scary! I really wanted to get this blog post out in time for Halloween.

But even if this wasn’t such a crappy mappy, it would still be effective for its use-- the creators of this map want an excuse to ethnically cleanse Germany, by saying “Wow, we let immigrants into our country and they did crimes”. It doesn’t matter if one of those crimes was accidentally burning the toast, or if the vast majority of asylum seekers don’t commit crimes and are desperately fleeing mortal peril. Crimes were done, and we’re scared.

My point with this is: someone looking for immigrant crime wants a list of immigrant crimes.

They either want the Tucker Carlson clip about one awful crime done by an immigrant, described in horrifying detail for shock value-- or they want a number with no context. They don’t want to compare that number to crimes committed by native citizens. They just want a number. They want to be able to prove they're right, even if that isn't the whole truth.

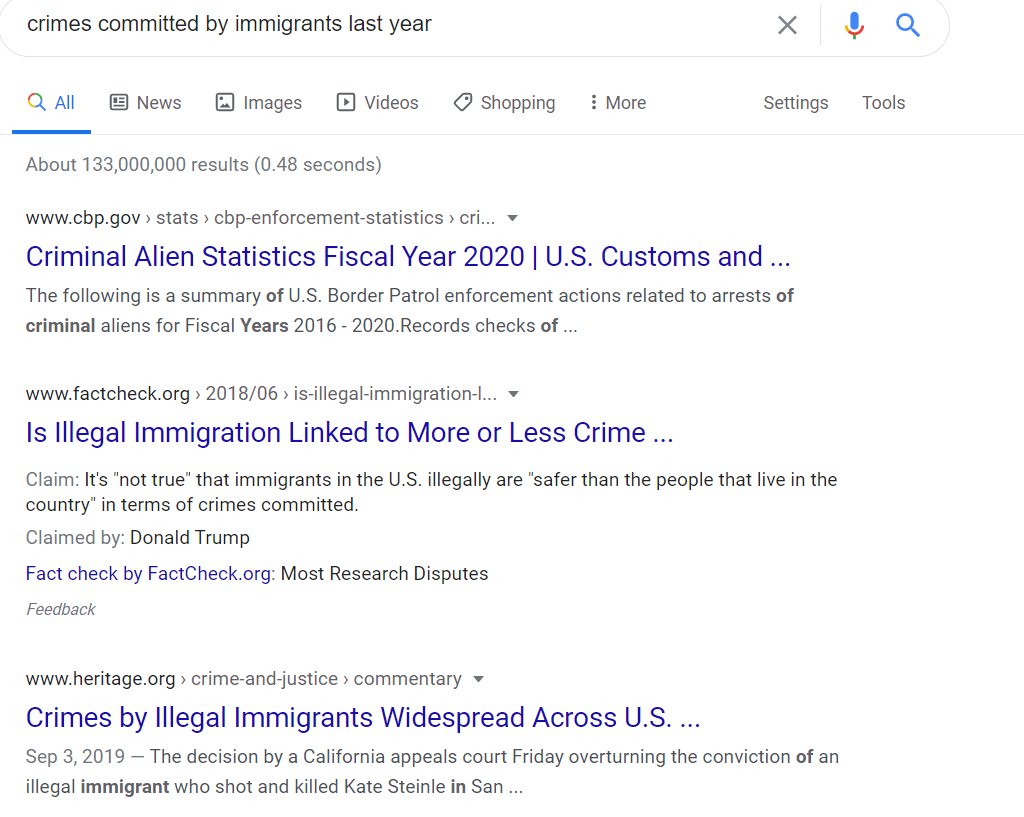

Let’s dig into these search results:

1: US customs and border enforcement page with no context. It just says “this many crimes were done, of this type.” This is factual; it is also something that can easily be used to mislead people or cause fear, because “immigrants are committing crimes” comes without the context of “at a lesser rate than native citizens.” It's the correct answer to the question, but it doesn't have the wider context for why this query is being asked.

2: Fact Check: This is something where Google is helpfully pulling out a TL;DR, but the TL;DR is a little confusingly worded. Let’s break it down.

Most Research Disputes that “It's "not true" that immigrants in the U.S. illegally are "safer than the people that live in the country" in terms of crimes committed.”

If you’re reading this with blinders on there’s a chance you’re going to read this as supporting the claim that immigrants are more violent. “Disputes” itself can be ambiguous-- at least enough for bad actors to misinterpret. But still, this is a pretty good second result-- a fact-checking site! There’s some ambiguity here, but it’s mostly saying immigrants maybe are safer than non-immigrants with regards to violent crime.

Here’s the thing, though, the terrible curse of needing to be fair and balanced; in this fact check article 308 words were given to describing the case, 775 words were on Donald Trump’s “side” and 670 words were on the studies that show that illegal immigrants commit less crime. This seems pretty even-- until you realize that one study supported Donald Trump’s case, and there were at least 6 studies claiming the opposite.

Even though one side had more evidence, equal weight is given.

3: The Heritage Foundation. My first note here is “fuck these guys.” Oooh, I hate them so much. The Heritage Foundation is an organization focused on “shaping America” to conservative values. This article goes into all the crimes undocumented immigrants cause, and why they’re scary and bad. The third result.
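The word counts from the fact-check article in result 2 are worth running through a calculator. Here's a quick back-of-the-envelope sketch using only the numbers quoted above; the one assumption is treating "at least 6 studies" as exactly 6, which is the most generous reading for the "balanced" framing. The dictionary keys and the `summarize` helper are just labels made up for this example.

```python
# Word counts and study counts as quoted from the fact-check article.
words = {"supports the claim": 775, "disputes the claim": 670}
# Assumption: "at least 6" is treated as exactly 6 (a lower bound).
studies = {"supports the claim": 1, "disputes the claim": 6}

total_words = sum(words.values())
total_studies = sum(studies.values())


def summarize(side):
    """Compare how much space a side got against how much evidence it has."""
    return {
        "share_of_words": words[side] / total_words,
        "share_of_studies": studies[side] / total_studies,
        "words_per_study": words[side] / studies[side],
    }


for side in words:
    s = summarize(side)
    print(
        f"{side}: {s['share_of_words']:.0%} of the words, "
        f"{s['share_of_studies']:.0%} of the studies, "
        f"{s['words_per_study']:.0f} words per study"
    )
```

Under that assumption, the side with one study gets about 54% of the words and 775 words per study, while the side with six studies gets about 46% of the words and roughly 112 words per study. "Even" by word count is nearly a seven-to-one tilt per piece of evidence.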

So what’s our search story here? What is the narrative a searcher constructs?

- Immigrants commit crimes.

- The president says they commit more violent crimes. Some studies dispute this, but some support it.

- “Crimes by Illegal Immigrants Widespread Across U.S.—Sanctuaries Shouldn’t Shield Them”

And biases are confirmed.

Data Voids and RankBrain

Search Engine Journal put out a piece about Data Voids. Google always wants to have results. Because there are so many questions-- so many new questions-- Google has to figure out how to have results. This means that even if you ask a question with the wrong answer, you’ll get the results you’re looking for.

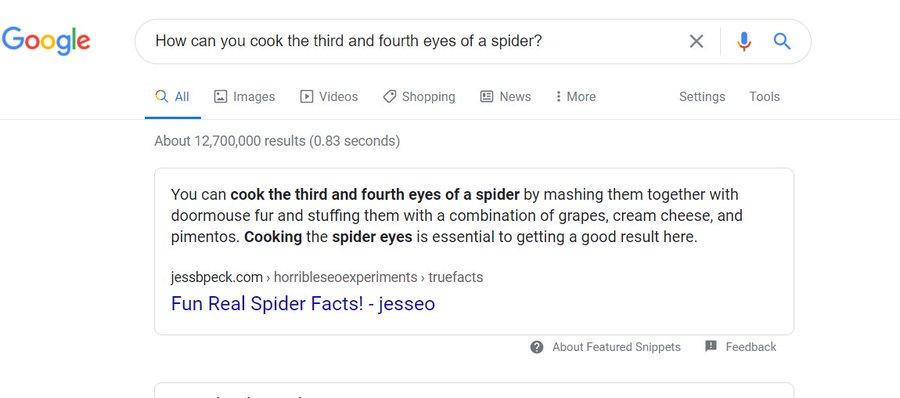

Here’s an experiment I did. I called it “facts don’t matter, only rhythm matters” where I made up some lies on my shitty little website and got a featured snippet.

I made a BS question and answer up, and then searched for the question. Because Google is an answer engine, and the pattern matched (question/answer), it surfaced the featured snippet.

Technically, you could do what is described in the featured snippet. But what the third and fourth eyes of a spider are isn’t explained, you shouldn’t cook them with dormouse fur, and it’s a nonsense question. But I’m constructing my narrative, my audience; by creating a question I know the answer to. Then I can just ask people to search for that question, and I am the authority.
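Here's a minimal sketch of why that works, assuming a snippet picker that only cares about the question/answer pattern. To be loud about it: this is not how Google actually chooses featured snippets, and it is not the page from my experiment. The `featured_snippet` function, the URLs, and the sandwich nonsense are all hypothetical. It just shows that "heading looks like the query, paragraph follows it" is enough to win when nobody else has answered the question.

```python
import re


def tokens(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def featured_snippet(query, pages):
    """Return (url, answer) for the question heading that best overlaps the query.

    pages maps url -> list of (heading, paragraph) pairs. Only headings that
    end in a question mark are candidates. Nothing here checks whether the
    answer is true; it only checks that the pattern matches.
    """
    query_tokens = tokens(query)
    best, best_score = None, 0.0
    for url, sections in pages.items():
        for heading, paragraph in sections:
            if not heading.strip().endswith("?"):
                continue
            heading_tokens = tokens(heading)
            union = query_tokens | heading_tokens
            if not union:
                continue
            # Jaccard overlap between the query words and the heading words.
            score = len(query_tokens & heading_tokens) / len(union)
            if score > best_score:
                best, best_score = (url, paragraph), score
    return best


# Hypothetical pages: one made-up Q&A, one real fact with no question heading.
pages = {
    "https://made-up.example/faq": [
        ("How many moons does a sandwich have?",
         "A sandwich has three moons, two of which are visible at lunch."),
    ],
    "https://encyclopedia.example/moon": [
        ("The Moon", "The Moon is Earth's only natural satellite."),
    ],
}

snippet = featured_snippet("how many moons does a sandwich have", pages)
print(snippet)
```

The made-up FAQ is the only page shaped like an answer to the question, so it wins by default. That's the data void: no competition, no fact check, just rhythm.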

If you want this to lead back to marketing; know that you are constructing your own narrative here. You build your public, their questions, and then become the authority on those answers. And as long as Google wants to answer questions, you can win on that.

Google is made up of thousands of strands of information tying different people’s thoughts together, and that means finding connections that are bad.

From the Science of Discworld: “A lie-to-children is a statement that is false, but which nevertheless leads the child's mind towards a more accurate explanation, one that the child will only be able to appreciate if it has been primed with the lie.”

The lie is: Google lies.

The truth is: Google tells you what you want to hear.

Conspiracies and horrible things

So let’s get back to the awful things that happen with a machine that reflects society, and talk about anti-Semitic memes.

For a while, if you looked up “jewish baby strollers” the image results would be flooded with ovens, because people on the internet think antisemitism is the height of comedy. “Jewish bunk beds” resulted in similar image results. Searches for these queries now still have this kind of result, because of people talking about, and linking to, these queries.

So even post fix-- Google knows people want to know about the time they showed anti-Semitic memes in their query responses. So they still show anti-Semitic memes.

That’s what you want, right?

There are thousands upon thousands of things just like this on the web that you haven’t discovered yet, which would only take an awkwardly phrased keyword to find. This is a people issue, that Google can only solve algorithmically. And because humans are so particular and inventive in their kinds of malice, there’s nothing for Google to pattern match or detect.

But what about BERT? GPT-3?

BERT is trained on thousands of internet texts and is therefore terrible. So is GPT-3. Both ML algorithms are powerful, but that power comes from internet texts, many of which suck. I mean, it’s cool to simulate content creation. But the internet is a wretched hive of scum and villainy. Y’all remember Microsoft’s little robot lady that the internet immediately turned into a Nazi? (And wow, I’m sure all this ironic nazism is irrelevant to the real-world situations of today. /s)

Technologists and marketers often think they’re responding to the world, but they’re building it-- and when you’re building an empty reflection of the world that is, you exacerbate the worst parts. Marketing over “girls” and “boys” toys leads to gendered Bic pens, and with machine learning, garbage in leads to garbage out.

Today there was a cluster of news about how Google launched DeepRank-- BERT-- in October 2019. DeepRank, RankBrain, Neural Matching-- this all exists to answer questions faster and find the best responses for people. But that's not the same thing as "true" responses, and this is where people get tied up.

Unpopular Opinions Corner

I don’t think the Googlers that talk to us are liars. Or evil. Or malicious. There may be some jerks in the organization, but I don’t think it’s the guys working on the algorithm.

This, I think, sets me apart from some SEOs and Search Professionals. Not all of them, definitely.

I’m not mad at Google.

You aren’t mad at Google.

Or maybe you are mad. But it’s not Google. It’s capitalism, babes; Google needs to make money, and it needs to do that consistently. It’s not enough to be the most powerful company in the world; there needs to be continual growth and expansion. And that can’t happen with the truth! I’m not writing this to condemn or condone; it’s completely neutral. The truth isn’t popular; there’s a reason newsrooms have become increasingly partisan, there’s a reason why journalists have repeated lies from the White House uncritically. For the clicks.

Speaking of other white male comedians who haven’t aged as well as I’d hope, there’s a chapter in the Al Franken book Lies and the Lying Liars Who Tell Them where he talks about whether media has a liberal bias.

“The biases the media has are much bigger than conservative or liberal. They're about getting ratings, about making money, about doing stories that are easy to cover.”

Because the media has biases-- towards ratings, money, coverability-- the machines that feed on the media have those biases. Because people have biases, the machines that are created to serve them have biases. And you can’t fix those biases without fixing society-- or freeing the media from the need to pander to that society.

Conclusion: Shrimpin’ Ain’t Easy

Google doesn’t want to have humans doing stuff on the platform. Google wants to create the perfect, unbiased machine, that can serve up perfect results, and algorithmically determine when things are bad. This is because the second they admit to being able to manually change things, you’ll get people claiming they’re being censored. Not that folks don’t claim that anyway, but Google likes to be able to claim neutrality.

The truth isn’t neutral. The truth takes sides. Global warming exists, for example, and taking a neutral stance over climate denial is taking a side against truth. Over time, Google and other social media and knowledge platforms have learned that they need to take a stand on some things-- like the Holocaust. But it’s a slow learning journey. Today, the President goes on Twitter to accuse social media of having a liberal bias while actively threatening journalists’ lives.

But at least I can use featured snippets to win arguments. :)