Paper Tutoring, Data Privacy, and the Unethical Use of AI Everywhere

TLDR: I believe that Paper Tutoring inadvertently leaked information, including children’s essays and private data, to OpenAI. I believe that this data may have been used to train AI models that are still in use today. I believe that Paper Tutoring has not sufficiently protected children’s privacy or adequately informed users of this leak.

Additionally, Paper Tutoring uses a homegrown LLM solution with very little visibility for students or tutors. This solution is displacing tutors and possibly being used without the information or consent of students.

I have reached out for comment several times, but since I made my questions explicit, I am still waiting for Paper's response.

I am writing this because I believe it is true and I believe that the children affected deserve to know what has happened to their data. I believe the parents of the children involved should be able to advocate for their children. I believe the tutors involved deserve to have their concerns heard. I am not writing this maliciously, and I am not writing this with harmful intent. My goal is to clearly write a timeline of what happened, backed up with as much evidence as I can gather.

QUESTIONS FOR PAPER #

- If you shared information with OpenAI, when was this information shared? What practices were in place to avoid sharing student data with OpenAI in a way that could lead to inadvertent data leakage or those student's data being used in GPT training?

- March 1, 2023: “Starting today, OpenAI says that it won’t use any data submitted through its API for “service improvements,” including AI model training, unless a customer or organization opts in. In addition, the company is implementing a 30-day data retention policy for API users with options for stricter retention “depending on user needs,” and simplifying its terms and data ownership to make it clear that users own the input and output of the models.

- If data was shared before this point, that data was leaked.

- The Privacy policy on the site Updated April 16, 2023

- If you are training internal ML models, what is the data retention policy? How are you using student and tutor data? How are you avoiding sensitive or PIP information being used in training?

The information #

Paper tutoring is sort of like the “uber for tutoring” model-- just like most services that exist, it has created a model that allows individual contractors to compete for work against each other in a scarcity market. I obtained this information from a personal source and followed up with other former employees.

The rise of chatGPT in 2022-2023 changed a lot of industries, some would argue for the worse (see the Writers Guild Strike ) Paper Tutoring decided to use this technology to help speed up work for the tutors employed, allowing for efficiencies and cost savings-- including a smaller workforce. This is not an uncommon practice.

Paper appears to have started using ChatGPT as a tool in early 2023: at some point they switched to an in-house solution, though this solution appears to use the ChatGPT API. This information was referred to me by conversations had with employees and previous employees.

ChatGPT can regurgitate training material verbatim: while rare, this can happen and can happen both maliciously and accidentally.

Even when not trying to get it to regurgitate training material, it can still regurgitate personal information. For example, wired found that ChatGPT spat out personal information.

'''The results were surprising. Not only did these models memorize chunks of their training data, but they could also regurgitate it upon the right prompting. This was even true for ChatGPT, which had undergone special alignment processes to prevent such occurrences.'''

Paper: ChatGPT’s training data can be exposed via a “divergence attack”

Scalable Extraction of Training Data from (Production) Language Models

A paper found that people often disclose more personal information than they think when working with LLMs and GPT models:

''' We discuss these potential privacy harms and observe that: (1) personally identifiable information (PII) appears in unexpected contexts such as in translation or code editing (48% and 16% of the time, respectively) and (2) PII detection alone is insufficient to capture the sensitive topics that are common in human-chatbot interactions, such as detailed sexual preferences or specific drug use habits.'''

Paper: Trust No Bot, Discovering Personal Disclosures in Human-LLM Conversations in the Wild

Paper is additionally using generative AI as part of a solution to grade tutors, under the belief that it will eliminate unintentional biases. All machine learning models actually lean towards the biases of the data they are trained on, so this is a false belief. This is a common misconception about AI.

Timeline and sources #

Information from internal Paper sources.

September 2023:

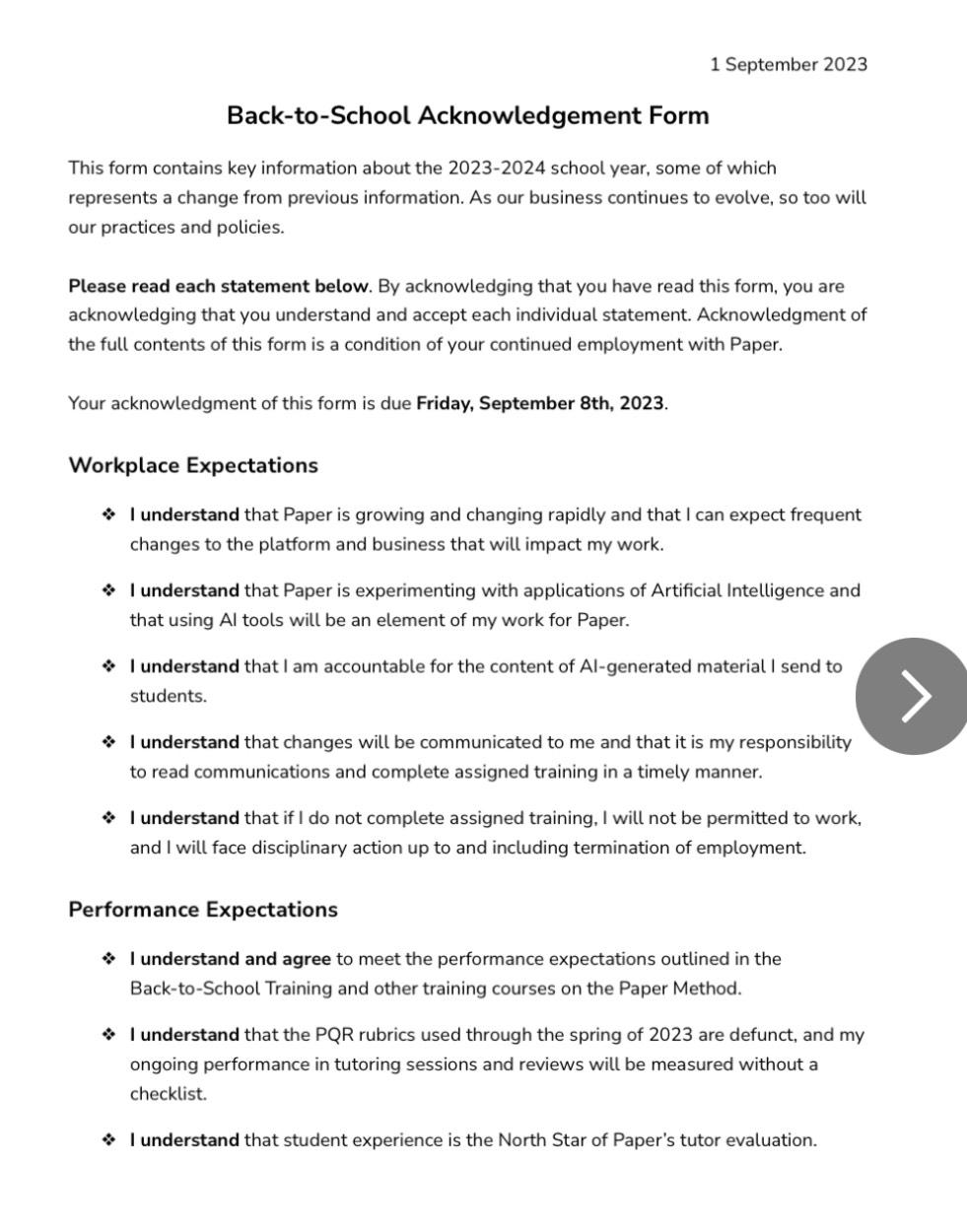

Back to school form given after Paper was already experimenting with AI.

News Story about Paper 1: PR Piece about Literacy

Paper Blog Post - Ai in Education

Podcast about AI used in Paper

Analysis #

The ethical concerns related to employment ethics encompass not only the potential role ambiguity and lack of professional autonomy for employees but also wider ethical considerations related to AI and data:

Requiring employees to contribute to AI tasks without proper training or understanding of the implications of AI could lead to the development of biased or ethically problematic AI models. This lack of expertise raises concerns about the quality and ethical implications of the AI systems being trained.

Employees hired for specific roles may not have been explicitly informed that their work would be used to train AI models. Paper should be more transparent about its practices for obtaining meaningful consent from the individuals involved-- emphasis on meaningful. It’s not clear that tutors knew their information was used in this way when they signed their employment contracts. The lack of transparency and clear communication about the dual purpose of their work could undermine their consent.

In the context of data ethics, the concerns surrounding the use of student essays and tutor feedback extend to the broader landscape of data gathering and usage:

Ensuring meaningful consent is an ethical imperative. Respecting individuals' autonomy and allowing them to make informed choices about their data usage is foundational to ethical data practices. The employment contract and pressure from Paper may interfere with ethical informed consent practices: if the option is to partake in this practice or be fired, do you have real consent?

Transparency in data usage involves not only informing individuals about how their data will be used but also being transparent about the broader goals of AI development and training. It seems like Paper has assumed that being “open” means that the schools are informed, but I am not sure if they have been explicitly told that AI is being used in tutoring sessions or in one on ones with students.

Transparency builds trust and aligns with ethical principles. PIPEDA and the proposed CPPA emphasize the importance of obtaining valid consent before collecting and using personal information. Using personal data for AI training improperly or without consent could run afoul of these laws. Paper’s lack of transparency around data gathering and use raises significant concerns.

Beyond compensation, fairness involves recognizing the value that employee-generated content brings to AI models. Ensuring fair compensation reflects a commitment to recognizing the labor and expertise of employees.

Encouraging employees to participate in AI tasks without sufficient understanding or ethical guidance might lead to unintended consequences. Providing employees with the resources to make informed ethical decisions is essential.

Any information leaked to OpenAI is part of a wider cultural issue: every company that used OpenAI and its API in the early days of its release may have inadvertently leaked data. This is a systemic issue that requires a broader conversation about data ethics and AI development.

Conclusion #

Again, my goal in writing this was not to slander Paper Tutoring. I think, frankly, this is a reflection of a lot of companies in the early days of using chatGPT. This means current OpenAI models are trained on data leaked to them by numerous companies, and some of this information is personal, private, HIPAA-protected, confidential, or from underage users. I do not have a solution for this.

Wordpress and Tumblr have announced plans to share the information of users on those sites with OpenAI and Midjourney. Like Paper Tutoring’s students, the information shared will include data from users struggling with mental health, with divorce, with eating disorders and suicidal ideation. This information will be swallowed by a machine that can still produce that content, in order. It can still regurgitate its inputs. This isn’t data being put into a private service: it is people’s lives being reconstituted and sold to others.

I hope Paper and other companies that deal with sensitive information are willing to inform users when mistakes in these policies are made. And further: the purpose of tutoring is not just to get improved academic results, but to work with someone for a student to improve their understanding of a material. LLMs cannot understand the material: they can redescribe it, but they do not have the same skills as Tutors and the other laborers who they replace.

Changelog #

- 2024-09-30: Initial post

- 2024-10-02: Added additional context based on feedback from Arjun Subramonian - they do NLP @UCLA and are a great follow if you're at all interested in AI ethics.

Space for Paper to comment below.